The world of GPU rendering is undergoing a seismic shift, driven by breakthroughs in hardware, software, and cloud computing. From hyper-realistic gaming visuals to immersive virtual environments for film and architecture, GPU rendering is faster, smarter, and more accessible than ever. But how did we get here, and what’s next?

Virtual GPUs take over the cloud

One of the biggest shifts is the rise of virtual GPUs (vGPUs) and cloud rendering. Companies like NVIDIA, AMD, and major cloud providers are racing to dominate this space. Take NVIDIA RTX Virtual Workstation (vWS): it lets creatives tap into cloud-based GPU power for rendering, freeing them from local hardware limitations. That matters most for studios tackling massive projects like blockbuster films or AAA games.

Cloud providers are leaning on GPUs like the NVIDIA T4 and other Tesla-series cards to offer scalable computing for rendering and machine learning. For instance, Weta Digital leveraged cloud resources to render Avatar: The Way of Water, slashing production time. For comparison, the in-house farm Weta built for the original Avatar (2009) was reported to occupy about 930 m² and hold 4,000 servers with a total of 35,000 processor cores, all running Ubuntu.

Ray tracing + AI

Ray tracing is now mainstream thanks to NVIDIA’s Ampere and AMD’s RDNA 2 architectures, whose dedicated hardware simulates how light bounces through a scene with jaw-dropping precision. But ray tracing is resource-intensive, which is where AI technologies come in.

- NVIDIA DLSS uses neural networks to upscale images, letting mid-tier GPUs handle ray-traced titles like Cyberpunk 2077 at high settings.

- AMD FSR takes a similar approach but skips AI, making it compatible with more devices.
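Both upscalers rest on the same arithmetic: shade fewer pixels internally, then reconstruct the image at the output resolution. The sketch below only counts pixels; it is not DLSS or FSR code, and the function name and resolutions are illustrative assumptions rather than official presets.

```python
def pixel_fraction(render_res, output_res):
    """Share of output pixels that are actually shaded before upscaling."""
    render_w, render_h = render_res
    output_w, output_h = output_res
    return (render_w * render_h) / (output_w * output_h)


# Illustrative resolutions (assumed for this example).
target_4k = (3840, 2160)
for internal in [(2560, 1440), (1920, 1080)]:
    share = pixel_fraction(internal, target_4k)
    print(f"{internal[0]}x{internal[1]} -> 4K shades {share:.1%} of the output pixels")
```

Rendering internally at 1440p means shading well under half of a 4K frame, which is where the headroom for ray tracing on mid-tier hardware comes from.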

AI is not just for games. Tools like NVIDIA’s OptiX Denoiser cut rendering times in Blender and Maya by cleaning up noise in complex scenes.
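Why does denoising save time? A path-traced pixel is an average of random samples, so its noise shrinks only slowly as samples are added. A denoiser lets the renderer stop early and clean up the result afterwards. The toy sketch below illustrates that trade-off on a flat grey surface; the box filter is a deliberately simple stand-in and is not how OptiX works, and every name and number here is an assumption made for illustration.

```python
import random


def noisy_pixel(true_value, samples, rng):
    """Average `samples` noisy estimates of one pixel's brightness."""
    total = sum(true_value + rng.uniform(-0.5, 0.5) for _ in range(samples))
    return total / samples


def box_denoise(row, radius=2):
    """Toy 1-D box filter: each pixel becomes the mean of its neighbours."""
    out = []
    for i in range(len(row)):
        window = row[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def rms_error(row, true_value):
    """Root-mean-square distance of a row of pixels from the true value."""
    return (sum((p - true_value) ** 2 for p in row) / len(row)) ** 0.5


rng = random.Random(0)   # fixed seed so runs are repeatable
TRUE_VALUE = 0.5         # flat grey surface (hypothetical scene)

cheap = [noisy_pixel(TRUE_VALUE, 4, rng) for _ in range(256)]       # 4 samples per pixel
expensive = [noisy_pixel(TRUE_VALUE, 64, rng) for _ in range(256)]  # 16x the work
cleaned = box_denoise(cheap)

print("4 spp error:           ", rms_error(cheap, TRUE_VALUE))
print("64 spp error:          ", rms_error(expensive, TRUE_VALUE))
print("4 spp + denoiser error:", rms_error(cleaned, TRUE_VALUE))
```

A plain box filter would blur edges and texture in a real image; production denoisers use learned models and extra scene information to avoid that, but the economics are the same: fewer samples plus a cleanup pass.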

Next-gen architectures

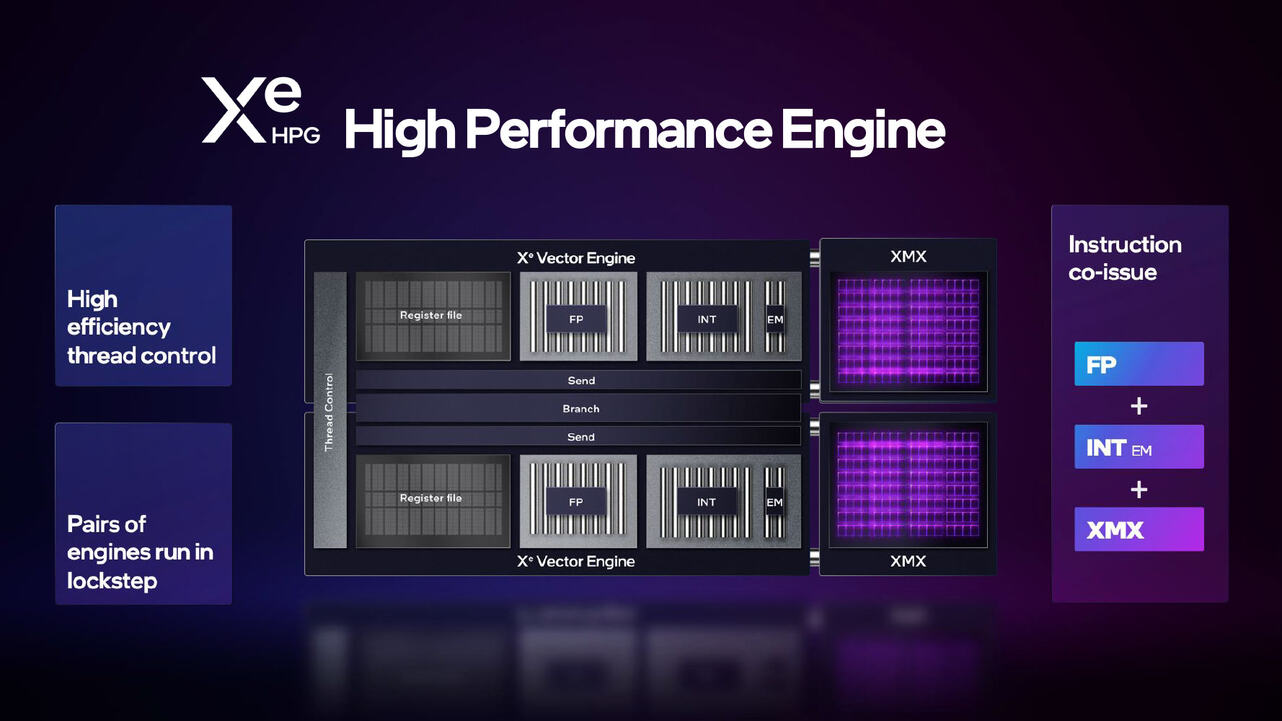

Modern GPU architectures—NVIDIA Hopper, AMD CDNA, and Intel Xe-HPG—are built for rendering and machine learning.

- Hopper dominates data centers, powering real-time virtual worlds.

- CDNA powers AMD’s Instinct data-center accelerators, targeting compute-heavy simulation and machine learning workloads.

- Intel’s Xe-HPG (in Arc Alchemist GPUs) brings ray tracing and AI to the consumer market, with Intel partnering with developers to optimize performance.

Game engines like Unreal Engine 5 and Unity are revolutionizing real-time rendering. Features like Nanite (micro-detail geometry) and Lumen (dynamic lighting) deliver cinematic quality without pre-rendering. Industrial Light & Magic used Unreal Engine to drive The Mandalorian’s virtual sets, letting filmmakers see near-final shots in real time.

Architects and designers are also actively using these technologies to visualize projects. For example, Foster + Partners uses Unreal Engine to create interactive presentations of their architectural projects.

Why cloud GPUs are winning

Cloud GPUs are popular with professionals for three main reasons. First, cloud solutions let you scale resources to match your needs. For large projects, studios can rent additional GPUs in the cloud to speed up rendering, which is particularly useful for movies or games with a high level of detail.

Second, cloud GPUs reduce hardware costs. Instead of investing in expensive workstations with powerful GPUs, studios can use cloud resources and pay only for what they use. This makes GPU rendering more affordable for smaller studios and independent developers.

Third, cloud GPUs offer flexibility and mobility. Professionals can work on projects from anywhere in the world, which is especially valuable now that remote work has become the norm since the pandemic.

An example of the successful use of cloud GPUs is Pixar Studios, which used cloud resources to render the film Soul. This enabled the studio to significantly reduce production time and hardware costs.
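The scaling and cost arguments come down to simple arithmetic, sketched below. Every figure (frame count, minutes per frame, hourly price, workstation price) is a hypothetical assumption chosen for illustration, not a real quote, and the model assumes frames render independently and split evenly across GPUs.

```python
import math


def render_hours(frames, minutes_per_frame, gpus):
    """Wall-clock hours when independent frames are split evenly across GPUs."""
    frames_per_gpu = math.ceil(frames / gpus)
    return frames_per_gpu * minutes_per_frame / 60


def cloud_cost(frames, minutes_per_frame, price_per_gpu_hour):
    """Pay-per-use cost: total GPU-hours times the hourly rate."""
    gpu_hours = frames * minutes_per_frame / 60
    return gpu_hours * price_per_gpu_hour


def break_even_gpu_hours(workstation_price, price_per_gpu_hour):
    """GPU-hours of rendering at which buying hardware matches renting."""
    return workstation_price / price_per_gpu_hour


# Hypothetical job: 100 seconds of footage at 24 fps, 5 minutes per frame.
FRAMES, MINUTES_PER_FRAME = 2400, 5
PRICE_PER_GPU_HOUR = 2.0     # assumed rental rate
WORKSTATION_PRICE = 6000.0   # assumed purchase price

print(render_hours(FRAMES, MINUTES_PER_FRAME, gpus=1))             # 200.0 hours
print(render_hours(FRAMES, MINUTES_PER_FRAME, gpus=50))            # 4.0 hours
print(cloud_cost(FRAMES, MINUTES_PER_FRAME, PRICE_PER_GPU_HOUR))   # 400.0
print(break_even_gpu_hours(WORKSTATION_PRICE, PRICE_PER_GPU_HOUR)) # 3000.0
```

In this simplified model the rental bill is the same whether the job runs on one GPU or fifty; only the waiting time changes. Renting stays cheaper than buying until the studio's total rendering passes the break-even number of GPU-hours.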

Rendering’s industry-wide impact

- Film/TV: Faster rendering means faster release. Pixar’s Luca saw GPU-powered render farms crunch frames in minutes, not hours.

- Gaming: Ray tracing + AI = immersive worlds.

- Architecture: Real-time visualization accelerates decisions. Zaha Hadid Architects uses GPU rendering for interactive 3D client walkthroughs.

What’s next? Six trends to watch

Quantum hybrids

Quantum computing is still at an early stage, but it could eventually speed up tasks like global illumination or fluid simulations. Companies are already exploring hybrid architectures that pair traditional GPUs with quantum processors, which might unlock a new level of realism in images and simulations.

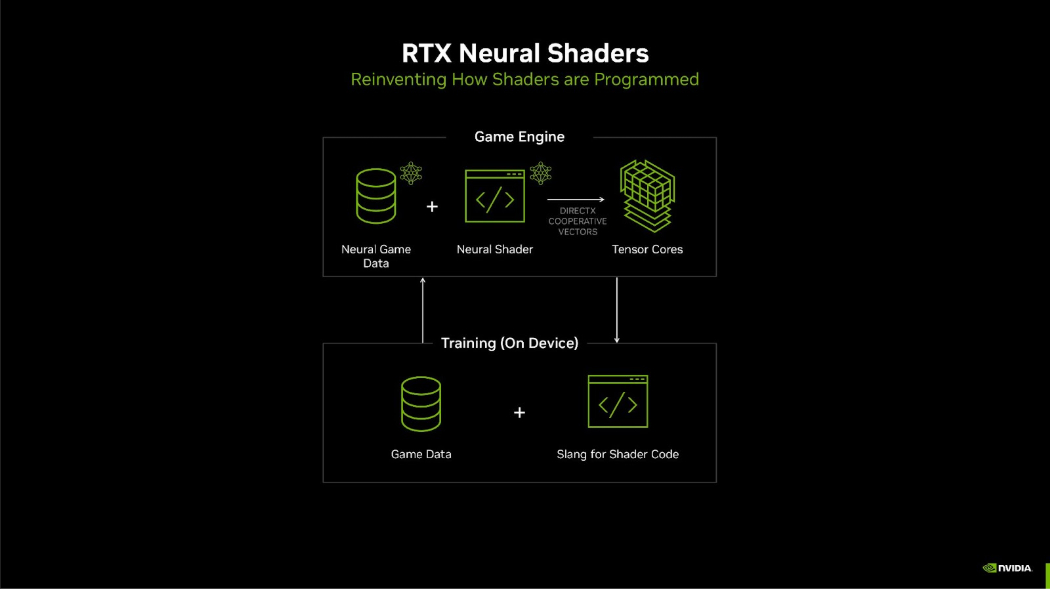

AI optimization and neural rendering

Neural rendering will generate textures and models from learned data rather than hand-written math. Imagine AI predicting a scene’s look before it is rendered. For the film and gaming industries, where rendering time is critical, that would be a real lifesaver.

Cloud rules everything

Expect cloud rendering to become the default, offering:

✓ Instant scalability

✓ Democratized access for indie devs

✓ Borderless collaboration

Ray tracing beyond gaming

Beyond gaming, architects will use real-time ray tracing for lighting-accurate client presentations.

Rendering for the Metaverse

Virtual concerts, events, and workspaces demand GPU-heavy worlds. Unreal Engine 5 is already paving the way.

AR/VR integration

GPUs will power lifelike AR apps: think virtual fitting rooms or medical training simulators running on headsets like Microsoft’s HoloLens 2.